There’s been a lot of talk about star schemas and snowflake schemas at SQLbits this week. If you’re not familiar with the terms they relate to two divergent approaches to data that are important to consider when moving to a cloud based reporting solution.

WHAT IS IT?

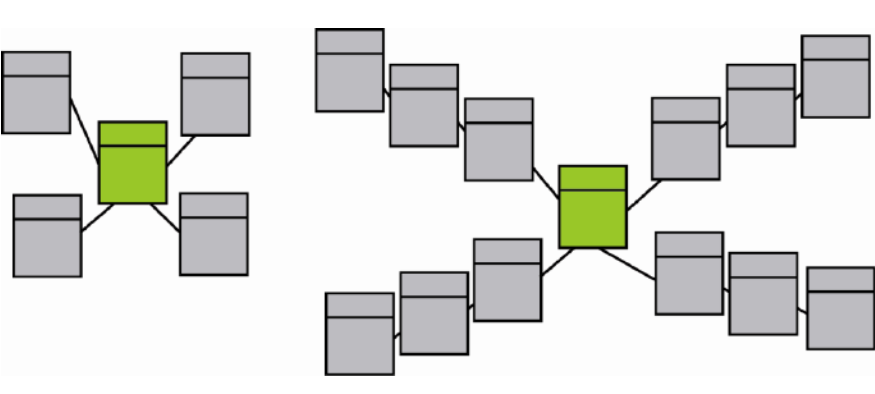

A star schema is a simple and denormalized structure where the fact table is at the center and surrounded by dimension tables. It looks like a star because of its shape.

On the other hand, a snowflake schema is a more normalized structure that breaks down dimension tables into sub-dimension tables. This makes the schema look like a snowflake, hence the name. This allows for better scalability and more complex relationships between tables. However, it can also make querying data more complicated and slower due to the increased number of joins required.

The star schema is simpler and faster for querying data, but less flexible, while the snowflake schema is more flexible and scalable, but can be more complex and slower for querying data. Both of these approaches have a purposes and a strength and in my experience a combination of both approaches is often best.

Snowflake is the standard in older systems, or in highly controlled transactional systems. It used to be taboo to list a value redundantly in two places. Star schemas are more likely to become out of sync, because you have to update values in multiple locations. Over time; however, it’s clear that multiple joins in ad hoc querying is inefficient, especially when we’re reporting on a large amount of data, and particularly in tables that are actively processing transactions.

MODERN REPORTING FOR LEGACY SYSTEMS

Preparing your data for consumption by converting legacy snowflake schema tables to a simplified star schema set of tables that users can query without locking or blocking transactional tables makes the most of both design approaches. Talk to one of our data experts to discuss how your data factory or warehouse resources can be simplified and turned into useful, meaningful business intelligence using modern storage solutions along with an intelligent and efficient approach to design that will optimize scanning and extraction.

You can’t make the most of the modern tools available by simply importing your legacy data into a data lake without some tweaks with the help of a reporting expert, and not just a . Get the advice of someone who understands the pitfalls. Keep your queries efficient to keep your costs low, and watch your data turn into meaningful action.

Email today for help with your data optimization questions.